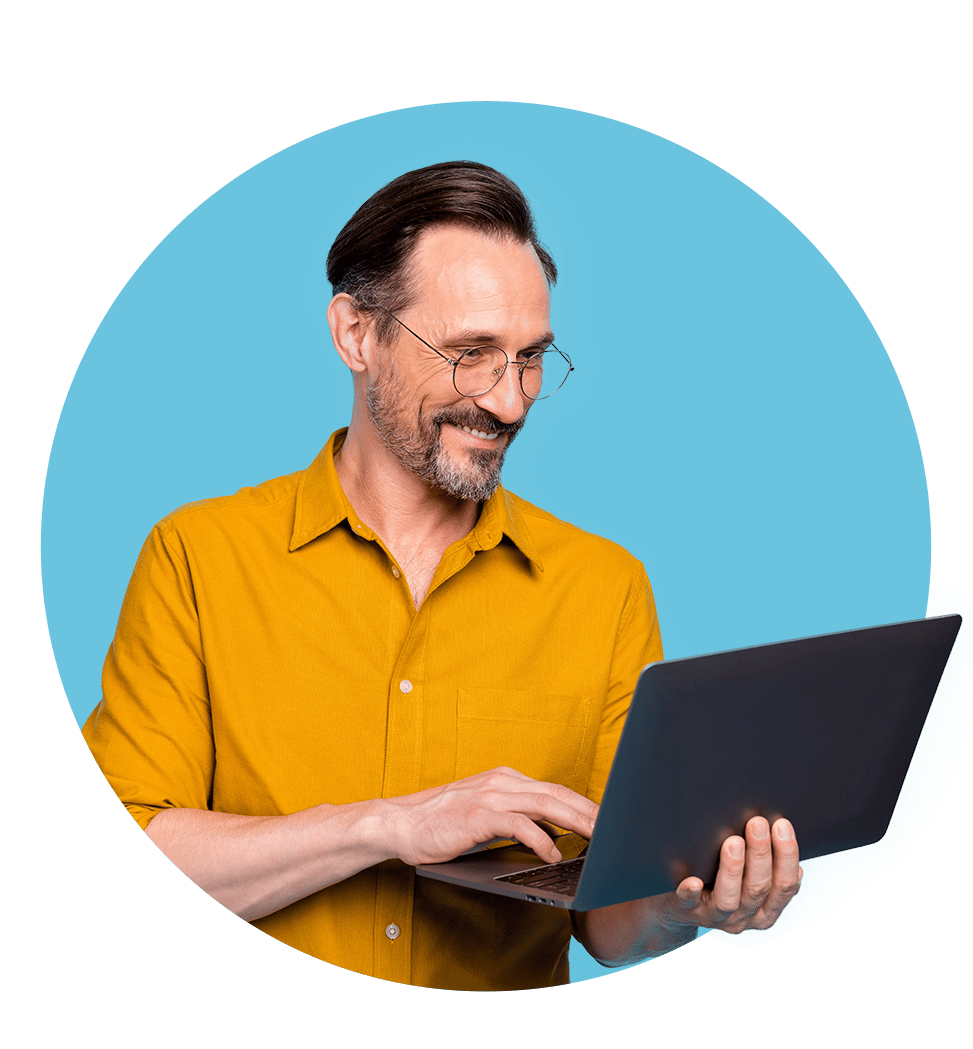

You’ve got the drive. We clear the path.

With OTAVA® on your side, you don’t have to be a cloud expert. We’ve got you covered, offering people-orchestrated solutions and services that are fine-tuned to your ambitions and engineered to succeed.

- Solutions and strategies purpose-built to meet your needs and budgets

- Security and compliance strong enough for the most regulated industries

- Sophisticated data resilience and protection powered by leading technologies

- Consultative and managed services to help you stay ahead of critical issues

Everything we do is backed by a human approach that prioritizes relationships, collaboration, and best-in-class service — giving you the freedom and support to reach your full potential.